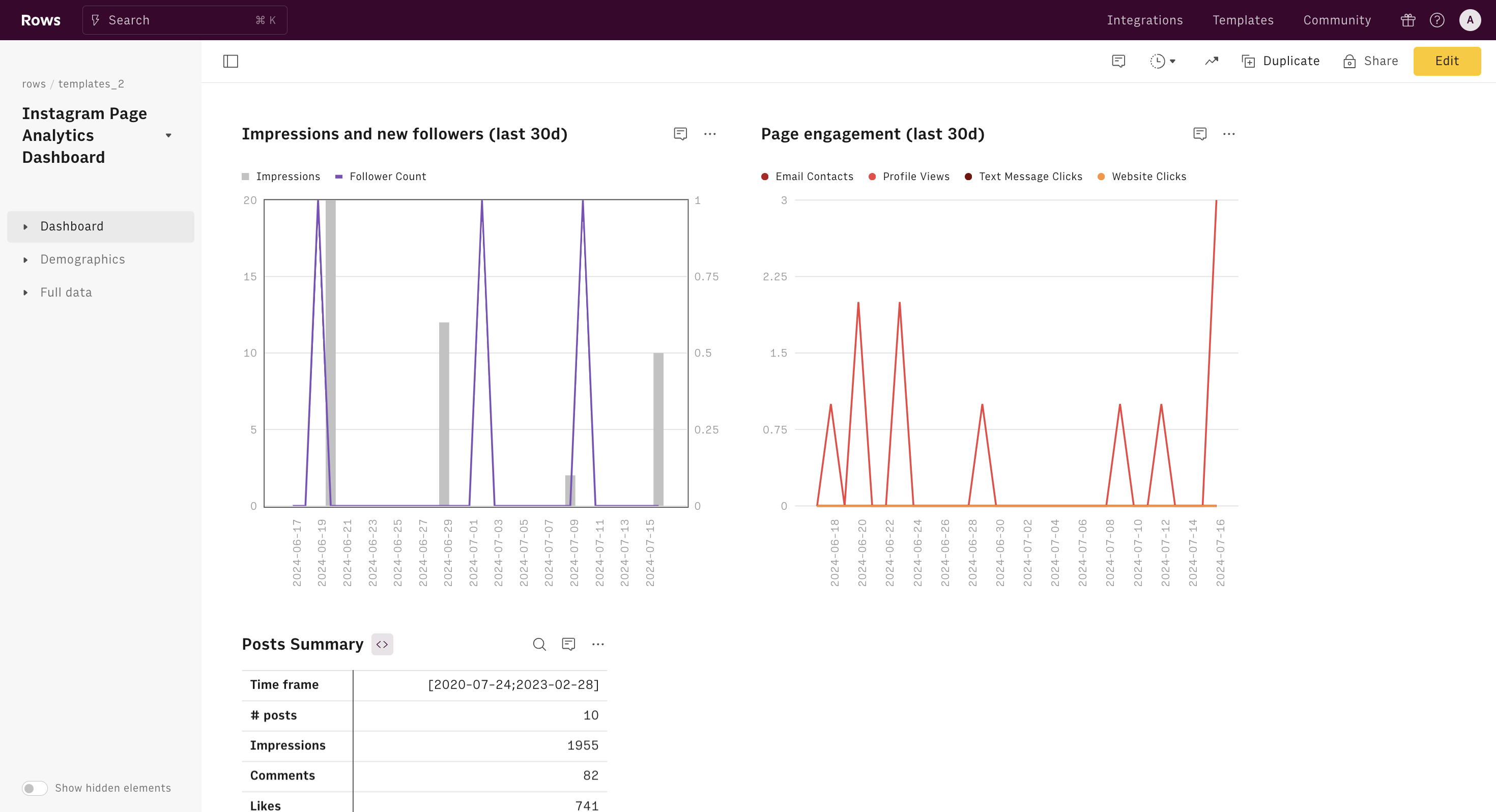

The LLM API price calculator is a versatile tool designed to help users estimate the cost of using various AI services from providers like OpenAI, Google, Anthropic, Meta, and Groq. These services include access to different language models that can perform tasks such as text generation, summarization, translation, and more. Pricing is typically based on the number of tokens processed; a token can be as short as a single character or as long as a whole word.

The table below compares the input and output token prices, in US dollars per 1 million tokens, for different models.

| Model | Input (per 1M tokens) | Output (per 1M tokens) |
|---|---|---|
| groq - Llama 3 70B (8K Context Length) | $0.59 | $0.79 |
| groq - Llama 3 8B (8K Context Length) | $0.05 | $0.10 |
| groq - Mixtral 8x7B SMoE (32K Context Length) | $0.24 | $0.24 |
| groq - Gemma 7B (8K Context Length) | $0.10 | $0.10 |
| gpt-4o | $5.00 | $15.00 |
| gpt-4-turbo | $10.00 | $30.00 |
| gpt-4 | $30.00 | $60.00 |
| Gemini 1.5 flash (up to 128k tokens) | $0.35 | $0.53 |
| Gemini 1.5 flash (>128k tokens) | $0.70 | $1.05 |
| Gemini 1.5 Pro (up to 128k tokens) | $3.50 | $10.50 |
| Gemini 1.5 Pro (>128k tokens) | $7.00 | $21.00 |
| Claude - Opus | $15.00 | $75.00 |
| Claude - Sonnet | $3.00 | $15.00 |
| Claude - Haiku | $0.25 | $1.25 |
| open-mistral-7b | $0.25 | $0.25 |
| open-mixtral-8x7b | $0.70 | $0.70 |
| open-mixtral-8x22b | $2.00 | $2.00 |
| mistral-small | $1.00 | $3.00 |
| mistral-medium | $2.70 | $8.10 |
| mistral-large | $4.00 | $12.00 |
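To show how per-million-token prices translate into the cost of a single request, here is a minimal Python sketch. It hard-codes three rows from the table; the names `PRICES_PER_1M` and `request_cost` are made up for this illustration and are not part of the calculator. Provider prices change often, so treat the figures as a snapshot.

```python
# USD per 1M tokens, copied from three rows of the pricing table.
# Names here are hypothetical and used only for illustration.
PRICES_PER_1M = {
    "gpt-4o": {"input": 5.00, "output": 15.00},
    "Claude - Haiku": {"input": 0.25, "output": 1.25},
    "groq - Llama 3 8B": {"input": 0.05, "output": 0.10},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of one request from its token counts."""
    price = PRICES_PER_1M[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    # Prices are quoted per 1,000,000 tokens, so scale down at the end.
    return total / 1_000_000


if __name__ == "__main__":
    # Compare the same request (2,000 input tokens, 500 output tokens)
    # across the three models.
    for name in PRICES_PER_1M:
        print(f"{name}: ${request_cost(name, 2_000, 500):.6f}")
```

For the same 2,000-token prompt and 500-token reply, the estimate differs by roughly two orders of magnitude between the cheapest and most expensive model in this small sample, which is why the model choice dominates the budget.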

The calculator provides a clear understanding of potential expenses, making it particularly useful for businesses and developers who need to accurately budget their AI-related expenditures. Users can input several parameters: the choice of model, the number of input and output tokens, and a multiplier to forecast future token usage.

The components of the AI API price calculator include:

Prompt: Paste your prompt into the input field. The calculator will automatically compute the number of tokens in your prompt.

Model Selection: Users choose which model they plan to use from providers such as OpenAI (e.g., GPT-4, GPT-4-8K, GPT-4-32K), Google Gemini, Anthropic, Meta, and Groq, each having different pricing rates per token.

Input or Output: Select whether you want to estimate the cost for the input tokens to the model or the output tokens from the model. Different models have varying costs for input and output tokens.

Multiplier: This factor is used to estimate the number of tokens required over a specified period. It helps forecast future usage and scale the estimated cost accordingly.
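The four components above can be sketched as one small function. This is only an approximation of what such a calculator might do, under stated assumptions: the token count uses the rough rule of thumb of about 4 characters per token for English text, whereas a real calculator would use each model's own tokenizer, and the names `estimate_tokens` and `estimate_cost` are hypothetical.

```python
import math

# USD per 1M tokens, taken from the pricing table (snapshot only).
PRICES_PER_1M = {
    "gpt-4-turbo": {"input": 10.00, "output": 30.00},
    "Claude - Sonnet": {"input": 3.00, "output": 15.00},
}


def estimate_tokens(prompt: str, chars_per_token: float = 4.0) -> int:
    """Crude token count. ASSUMPTION: about 4 characters per token."""
    return math.ceil(len(prompt) / chars_per_token)


def estimate_cost(prompt: str, model: str, direction: str, multiplier: int = 1) -> float:
    """Estimate USD cost for a prompt, priced as input or output tokens.

    `multiplier` scales the token count, e.g. the number of similar
    requests expected over the forecasting period.
    """
    if direction not in ("input", "output"):
        raise ValueError("direction must be 'input' or 'output'")
    tokens = estimate_tokens(prompt) * multiplier
    return tokens * PRICES_PER_1M[model][direction] / 1_000_000


if __name__ == "__main__":
    prompt = "Summarize the following article in three bullet points."
    # Forecast 10,000 similar prompts sent to gpt-4-turbo as input.
    print(f"${estimate_cost(prompt, 'gpt-4-turbo', 'input', 10_000):.2f}")
```

Keeping the input/output choice as an explicit parameter mirrors the calculator's design: the same token count can cost several times more when priced as output than as input.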