What Is a Robots.txt Generator?

Our robots.txt generator simplifies the creation of a crucial file for your website. A robots.txt file, placed at your site's root, tells search engine crawlers which parts of your site they may crawl and which to skip. This tool is essential for:

Improving your site's SEO by controlling which content crawlers can reach

Keeping private or unfinished areas out of crawlers' paths (though not securing them)

Reducing server load by limiting unnecessary crawler access

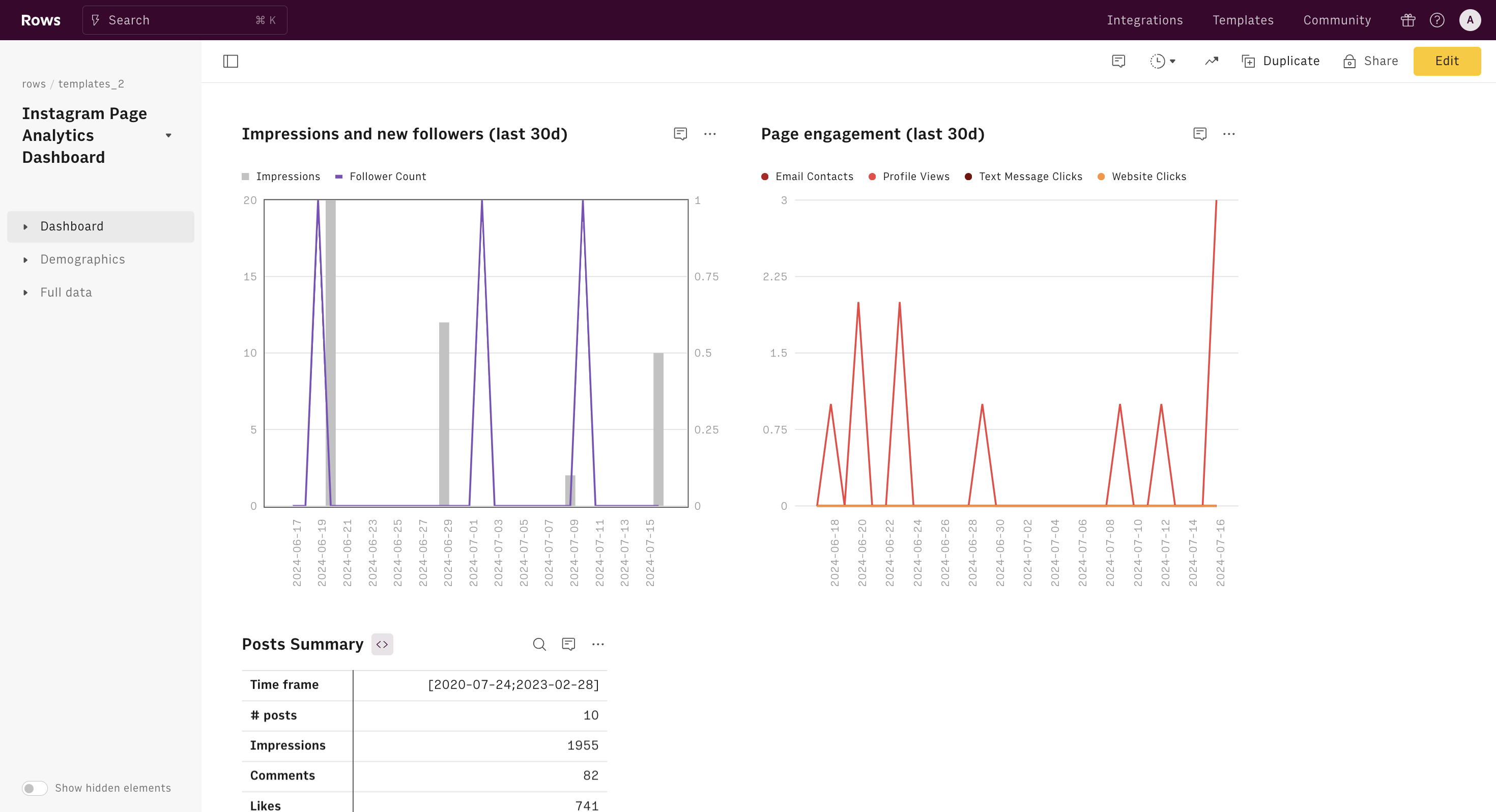

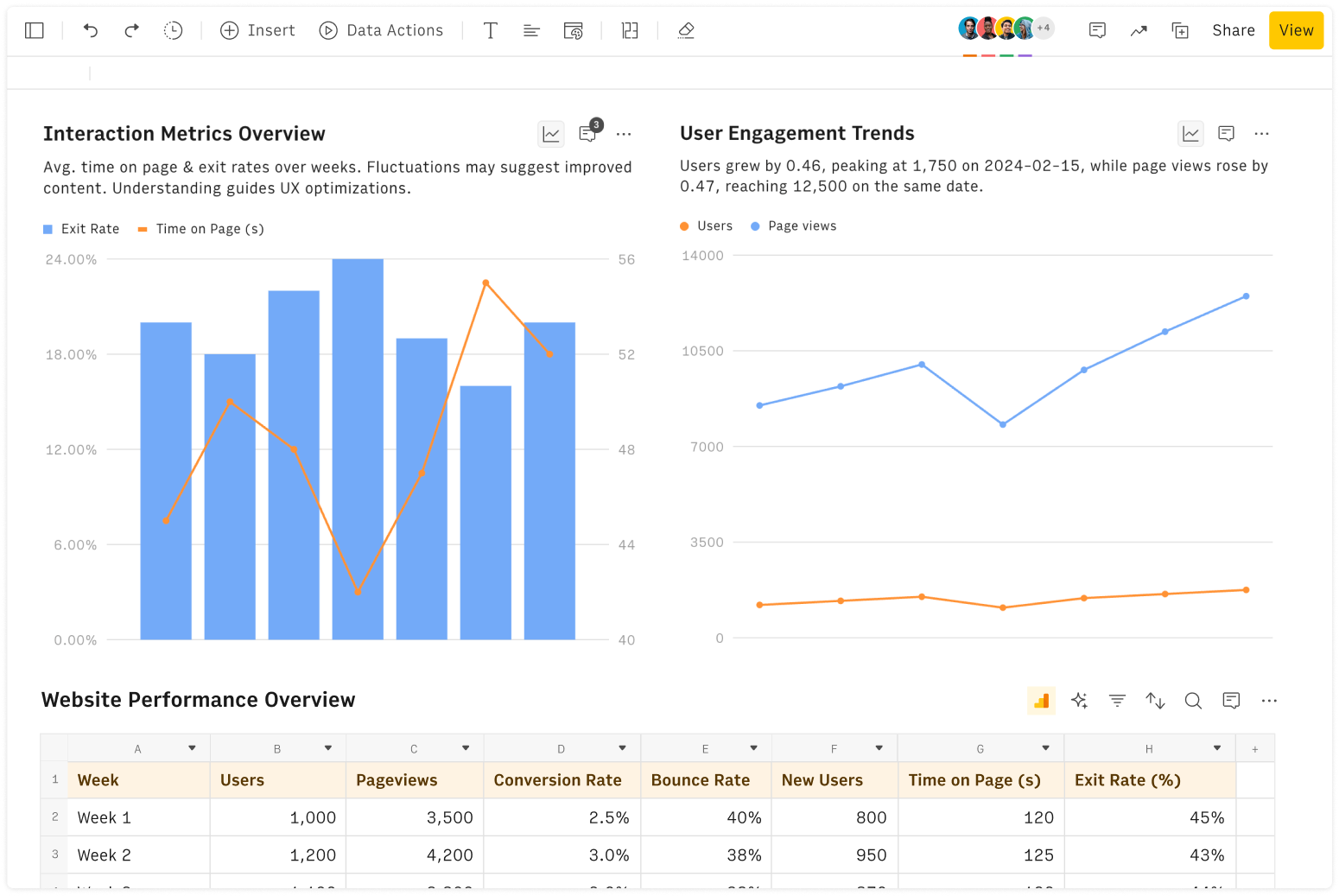

This robots.txt generator is built with Rows and leverages Rows' native AI capabilities to generate content based on your input.

How to Use the Robots.txt Builder

Creating an effective robots.txt file is straightforward with our builder. Configure the following parameters:

Robots Permissions

Choosing between "Allow all" and "Deny all" robots is a critical decision that affects your site's visibility:

Allow all: This option permits all search engine crawlers to access and index your website. It's ideal for most public websites aiming to maximize their online presence.

Example:

    User-agent: *
    Allow: /

Deny all: This setting blocks all crawlers from accessing your site. It's useful for:

Development or staging environments

Private intranets

Websites not ready for public viewing

Example:

    User-agent: *
    Disallow: /

Insight: While "Allow all" is common, consider a hybrid approach. You might allow most content but restrict specific areas, like administrative pages or user accounts.
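One way to sanity-check these settings, including the hybrid variant, is to parse the rules with Python's standard-library `urllib.robotparser` and ask whether a given URL may be fetched. This is a minimal sketch: the helper `is_allowed`, the constant names, and the example.com URLs are illustrative assumptions, not part of the generator.

```python
from urllib.robotparser import RobotFileParser

ALLOW_ALL = "User-agent: *\nAllow: /\n"
DENY_ALL = "User-agent: *\nDisallow: /\n"
# Hybrid: everything is crawlable except two restricted areas.
HYBRID = "User-agent: *\nDisallow: /admin/\nDisallow: /account/\n"


def is_allowed(robots_txt: str, url: str, agent: str = "*") -> bool:
    """Return True if `agent` may fetch `url` under the given rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


if __name__ == "__main__":
    for name, rules in [("allow all", ALLOW_ALL), ("deny all", DENY_ALL), ("hybrid", HYBRID)]:
        for url in ("https://www.example.com/blog/post", "https://www.example.com/admin/settings"):
            print(f"{name:10} {url}: {is_allowed(rules, url)}")
```

The standard-library parser uses simple prefix matching, so it is suited to checks like these rather than to wildcard rules.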

Crawl-Delay

The Crawl-Delay directive helps manage your server's resources by controlling how quickly bots can crawl your site:

Example:

    User-agent: *
    Crawl-delay: 5

This tells all bots to wait 5 seconds between requests.

Insights:

Smaller sites can often handle faster crawling (1-5 seconds)

Larger, more complex sites might need longer delays (10+ seconds)

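Different crawlers can be assigned different delays:

    User-agent: Googlebot
    Crawl-delay: 5

    User-agent: Bingbot
    Crawl-delay: 10

Not every crawler honors this directive. Googlebot ignores Crawl-delay, so the first group above has no effect on Google's crawling, while Bingbot respects it.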
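To confirm which delay a file declares for each crawler, the per-agent groups can be read back with `urllib.robotparser` from Python's standard library. The sketch below assumes the two-group sample shown above; the helper `delay_for` and the agent name `OtherBot` are hypothetical. It only reports what the file says, not whether a crawler will actually obey it.

```python
from typing import Optional
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: Googlebot
Crawl-delay: 5

User-agent: Bingbot
Crawl-delay: 10
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def delay_for(agent: str) -> Optional[int]:
    """Return the declared Crawl-delay for `agent`, or None if none applies."""
    return parser.crawl_delay(agent)


if __name__ == "__main__":
    for agent in ("Googlebot", "Bingbot", "OtherBot"):
        print(agent, delay_for(agent))
```

Because this sample has no `User-agent: *` group, a crawler that is not named in the file gets no declared delay at all.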

Sitemap

Including your sitemap URL helps search engines discover all your pages efficiently:

Example:

Sitemap: https://www.yourwebsite.com/sitemap.xml

Insights:

You can list multiple sitemaps if needed

Update your sitemap regularly as your site content changes

Consider using dynamic sitemap generators for large, frequently updated sites
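As a quick check that sitemap entries are picked up, the declared URLs can be listed with `RobotFileParser.site_maps()`, which is available in Python 3.8 and later. This is only a sketch: the second sitemap URL (`sitemap-blog.xml`) is a made-up example of listing more than one sitemap.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Allow: /

Sitemap: https://www.yourwebsite.com/sitemap.xml
Sitemap: https://www.yourwebsite.com/sitemap-blog.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# site_maps() returns the Sitemap URLs in file order, or None if there are none.
SITEMAPS = parser.site_maps()

if __name__ == "__main__":
    for url in SITEMAPS or []:
        print(url)
```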

Restricted Directories

Blocking specific directories helps focus crawlers on your most important content:

Example:

    User-agent: *
    Disallow: /admin/
    Disallow: /private/
    Disallow: /temp/

Insights:

Block directories containing:

Duplicate content (e.g., print-friendly versions)

User-specific content (e.g., /user/)

Temporary or test pages

You can use wildcards for more complex rules:

Disallow: /*.pdf$

This blocks all PDF files.
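To see how such a pattern behaves, here is a small sketch that translates a robots.txt path pattern into a regular expression, treating `*` as "any run of characters" and a trailing `$` as "end of URL". The function name `pattern_matches` and the sample paths are assumptions for illustration; real crawlers may differ in details such as percent-encoding.

```python
import re


def pattern_matches(pattern: str, path: str) -> bool:
    """Return True if a robots.txt path pattern matches the start of `path`."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape literal pieces and join them with ".*" where the pattern had "*".
    body = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    if anchored:
        body += r"\Z"  # the pattern must consume the whole path
    return re.match(body, path) is not None


if __name__ == "__main__":
    for path in ("/docs/report.pdf", "/docs/report.pdf?download=1", "/docs/report.html"):
        print(path, pattern_matches("/*.pdf$", path))
```

Note that because of the `$` anchor, a PDF URL followed by a query string (such as `/docs/report.pdf?download=1`) is not matched by `/*.pdf$`. Dropping the `$` would match it.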

Consider allowing search engines to crawl your CSS and JavaScript files to help them understand your site's layout:

    Allow: /*.js$
    Allow: /*.css$

Remember, while robots.txt provides guidelines, it's not a security measure. Sensitive information should be protected through other means, such as password protection or IP restrictions.

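After configuration, save the generated content as a plain-text file named robots.txt and upload it to your website's root directory, so it is reachable at /robots.txt. Then validate the file, for example with a search engine's robots.txt testing tool, to confirm the rules behave as intended.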
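The four parameters above map onto the file in a fairly mechanical way. The following is a rough sketch of that mapping, not how the Rows generator is implemented; the function `build_robots_txt` and its parameter names are hypothetical.

```python
from typing import Optional, Sequence


def build_robots_txt(
    allow_all: bool = True,
    disallow: Sequence[str] = (),
    crawl_delay: Optional[int] = None,
    sitemaps: Sequence[str] = (),
) -> str:
    """Assemble robots.txt text for all crawlers from the four settings."""
    lines = ["User-agent: *"]
    if not allow_all:
        lines.append("Disallow: /")          # "Deny all"
    elif disallow:
        lines.extend(f"Disallow: {path}" for path in disallow)  # restricted directories
    else:
        lines.append("Allow: /")             # "Allow all"
    if crawl_delay is not None:
        lines.append(f"Crawl-delay: {crawl_delay}")
    if sitemaps:
        lines.append("")                     # blank line before the sitemap entries
        lines.extend(f"Sitemap: {url}" for url in sitemaps)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(build_robots_txt(
        disallow=["/admin/", "/private/", "/temp/"],
        crawl_delay=5,
        sitemaps=["https://www.yourwebsite.com/sitemap.xml"],
    ))
```

The returned string can then be written to a file named `robots.txt`, for instance with `pathlib.Path("robots.txt").write_text(...)`, before uploading it to the site root.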

Best Practices for Creating robots.txt Files

Enhance your robots.txt file's effectiveness with these tips:

Place the file in the root directory (e.g., example.com/robots.txt)

Keep the file concise and simple to minimize errors

Use "#" for comments to explain rules

Avoid listing sensitive directories explicitly, since robots.txt is publicly readable and would advertise their location

Monitor crawler activity through web server logs

Include a link to your XML sitemap

Stay informed about web crawler behavior updates

Consult search engine guidelines for specific recommendations

Practical Applications in SEO

An effective robots.txt file is crucial for the success of your SEO efforts. In particular, it helps with the following:

Exclude Duplicate Content

Keeping crawlers away from duplicate pages stops them from spending crawl activity on copies and reduces the chance that a duplicate competes with the original in search results.

Examples:

E-commerce site with multiple product categories:

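    User-agent: *
    Disallow: /category/shoes/sneakers
    Disallow: /category/footwear/sneakers

This keeps crawlers off both category paths, so the same products are not crawled twice. It only makes sense if the canonical product pages live under a different path; otherwise, block just one of the two.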

Printable versions of articles:

    User-agent: *
    Disallow: /print/

This blocks indexing of printer-friendly pages that duplicate main article content.

Prioritize Important Pages

Directing crawlers to focus on your most valuable content increases the likelihood of high search result rankings.

Example:

    User-agent: *
    Allow: /blog/
    Allow: /products/
    Disallow: /

    Sitemap: https://www.example.com/sitemap_index.xml

This configuration allows crawling of the blog and products sections while blocking everything else, including the homepage. The sitemap helps crawlers find and prioritize key pages within these allowed sections.

Protect Sensitive Information

Keeping account areas and user-generated content out of search engine crawls supports privacy, though robots.txt only guides compliant crawlers and does not secure anything on its own.

Examples:

Blocking admin and user areas:

    User-agent: *
    Disallow: /admin/
    Disallow: /user/
    Disallow: /account/

Preventing indexing of internal search results:

    User-agent: *
    Disallow: /search?

This stops search engines from crawling dynamic search result pages, which often contain low-value or duplicate content.

Optimize Crawl Budget

Ensuring crawlers use their allocated time efficiently by focusing on your most relevant pages is key to effective SEO.

Examples:

Large e-commerce site:

    User-agent: *
    Allow: /products/
    Allow: /categories/
    Allow: /blog/
    Disallow: /products/out-of-stock/
    Disallow: /products/discontinued/
    Disallow: /internal-search?
    Crawl-delay: 2

    Sitemap: https://www.example.com/sitemap.xml

This configuration:

Allows crawling of key sections (products, categories, blog)

Prevents crawling of out-of-stock or discontinued products

Blocks internal search results

Sets a 2-second crawl delay for crawlers that honor the directive

Provides a sitemap for efficient discovery of important pages
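Because `Allow: /products/` and `Disallow: /products/out-of-stock/` overlap, it helps to see how the conflict is resolved. Under the rule followed by major crawlers and described in RFC 9309, the longest matching path wins, and Allow wins a tie. The sketch below applies that rule to the configuration above using plain prefix matching (no wildcards); the function `decide`, the `RULES` list layout, and the sample paths are illustrative assumptions.

```python
from typing import List, Tuple

RULES: List[Tuple[str, str]] = [
    ("allow", "/products/"),
    ("allow", "/categories/"),
    ("allow", "/blog/"),
    ("disallow", "/products/out-of-stock/"),
    ("disallow", "/products/discontinued/"),
    ("disallow", "/internal-search?"),
]


def decide(rules: List[Tuple[str, str]], path: str) -> bool:
    """Return True if `path` may be crawled: longest match wins, Allow wins ties."""
    best_len = -1
    allowed = True  # a path that no rule matches may be crawled
    for directive, prefix in rules:
        if not path.startswith(prefix):
            continue
        is_allow = directive == "allow"
        if len(prefix) > best_len or (len(prefix) == best_len and is_allow):
            best_len = len(prefix)
            allowed = is_allow
    return allowed


if __name__ == "__main__":
    for path in ("/products/widget", "/products/out-of-stock/widget",
                 "/internal-search?q=shoes", "/about"):
        print(path, decide(RULES, path))
```

In this sketch `/about` is also crawlable, since nothing disallows it: without a `Disallow: /` line, the Allow entries document intent rather than restrict crawling to those sections.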

News website:

    User-agent: *
    Allow: /news/
    Allow: /opinion/
    Disallow: /archives/
    Disallow: /print/
    Disallow: /tags/
    Crawl-delay: 1

    Sitemap: https://www.newssite.com/news-sitemap.xml

This setup:

Prioritizes current news and opinion pieces

Blocks older archived content and print versions

Prevents crawling of tag pages, which often create duplicate content

Sets a short crawl delay (1 second) so fresh content is picked up quickly

Provides a news-specific sitemap for quick indexing of fresh content

By leveraging our robots.txt generator and following these guidelines, you'll enhance your website's visibility and performance in search results while maintaining control over your content's accessibility.